Magento Robots.txt and Sitemap: Everything You Need to Know


Maria Tkachuk


- Magento 2

- 6 min read

Good search engine optimization (SEO) matters for all online content, from blogs to e-commerce shops.

It is vital for business owners and startups to be at the top of the results page.

If your well-structured site ranks high in search engines, it will keep driving more traffic to your website.

Google, Bing, and other search engines run bots (crawlers) that scan and index the web to decide which pages appear at the top of their results. Robots.txt and Sitemap files are the settings that guide these crawlers, and using them well can significantly increase the number of visitors your platform attracts.

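Used properly, they can help your business make more sales. They also let you keep private or unimportant pages out of search results, although robots.txt is only a request that well-behaved crawlers follow, not protection against malicious bots or scammers.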

Approximately 25,000 US websites depend on Magento, so let's look at how robots.txt in Magento controls which pages crawlers can visit and how the Sitemap tells them which pages to index. Detailed information about Robots.txt and Sitemap files for Magento is provided below, so dive right in!

Definition and Functionality

Robots.txt is a plain-text file, placed at the root of a website, that most sites use to give instructions to crawlers. It defines which parts of your web platform crawlers may access, and therefore influences which pages get indexed and which ones stay out of search results.
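To make the idea concrete, here is a minimal sketch of how a well-behaved crawler reads these instructions, using Python's standard-library `urllib.robotparser`. The domain `shop.example.com` and the rules are hypothetical examples. Note that this parser only does simple prefix matching on paths; it does not understand the `*` and `$` wildcards that Google supports.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example store
ROBOTS_TXT = """\
User-agent: *
Disallow: /customer/
Disallow: /review/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for url in ("https://shop.example.com/women/tops.html",
            "https://shop.example.com/customer/account/"):
    # can_fetch() answers: may this user agent crawl this URL?
    print(url, parser.can_fetch("*", url))
```

Because the rules sit under `User-agent: *`, they also apply to a named bot such as `Googlebot` when no group targets it specifically.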

You can stop certain webpages from being crawled, or lift the block completely. To check whether your site has this file, open /robots.txt at the root of your domain in a browser, or use the robots.txt tools in Google Search Console. How do you add robots.txt in Magento 2? Keep reading!
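Crawlers only ever look for robots.txt at the root of a host, never in a subfolder. The sketch below, in Python with only the standard library, derives that location from any page URL and optionally checks whether the file is served. The names `robots_url` and `has_robots_txt` are hypothetical helpers, and the check simply treats an HTTP 200 response as "present".

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt location crawlers check for a given page."""
    parts = urlsplit(page_url)
    # Keep only scheme and host (including any port); drop path, query, fragment
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def has_robots_txt(page_url: str, timeout: float = 10.0) -> bool:
    """Return True if the site's robots.txt answers with HTTP 200."""
    try:
        with urlopen(robots_url(page_url), timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError (e.g. a 404) and timeouts are OSErrors
        return False


print(robots_url("https://shop.example.com/women/tops.html?color=red"))
# prints https://shop.example.com/robots.txt
```

For example, `has_robots_txt("https://shop.example.com/")` would request `https://shop.example.com/robots.txt` and return `True` only if the server answers with status 200.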

A Sitemap is a map of your site. An XML Sitemap is written for crawlers and lists the URLs of the pages you want search engines to find and index. An HTML sitemap, on the other hand, is a page that helps visitors navigate the site. A single Magento 2 store can easily have over a hundred pages, so it is essential to point crawlers toward the right ones.
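For illustration, the minimal XML Sitemap below follows the sitemaps.org protocol: a `<urlset>` root in the protocol's namespace and one `<url>` with a `<loc>` per page. This is a hedged sketch in Python using `xml.etree.ElementTree`, not Magento's own generator, and the `build_sitemap` helper and example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML Sitemap listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in urls:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as '&' automatically
        ET.SubElement(url, "loc").text = page
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(["https://shop.example.com/",
                     "https://shop.example.com/women.html"]))
```

Escaping matters here: the protocol requires characters like `&` in URLs to be written as entities, which a real XML library handles for you.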

IMPORTANT NOTE

You can also add images to the Sitemap to attract more traffic, since more and more people now search online using pictures. To learn how to configure the Sitemap in Magento 2, read the rest of the article below.
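Image entries use Google's image sitemap extension, which adds `<image:image>` elements (each with an `<image:loc>`) inside a page's `<url>`. A minimal sketch in Python, assuming a hypothetical `sitemap_with_images` helper that takes a mapping from each page URL to the images shown on it:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Serialize the sitemap namespace as the default and the image one as "image:"
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def sitemap_with_images(pages):
    """pages maps each page URL to a list of image URLs shown on that page."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page, images in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page
        for image in images:
            img = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(img, f"{{{IMAGE_NS}}}loc").text = image
    return ET.tostring(urlset, encoding="unicode")


print(sitemap_with_images({
    "https://shop.example.com/tee.html": [
        "https://shop.example.com/media/tee-front.jpg",
        "https://shop.example.com/media/tee-back.jpg",
    ],
}))
```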

Magento 2 Robots.txt File Configuring Ways

Magento 2 lets you configure the robots.txt file, which lives at the root of your website, to suit your requirements. Only two or three settings need to be filled in. Follow the guide below to edit your search engine robots settings without confusion:

1. Log in to your Magento admin panel.

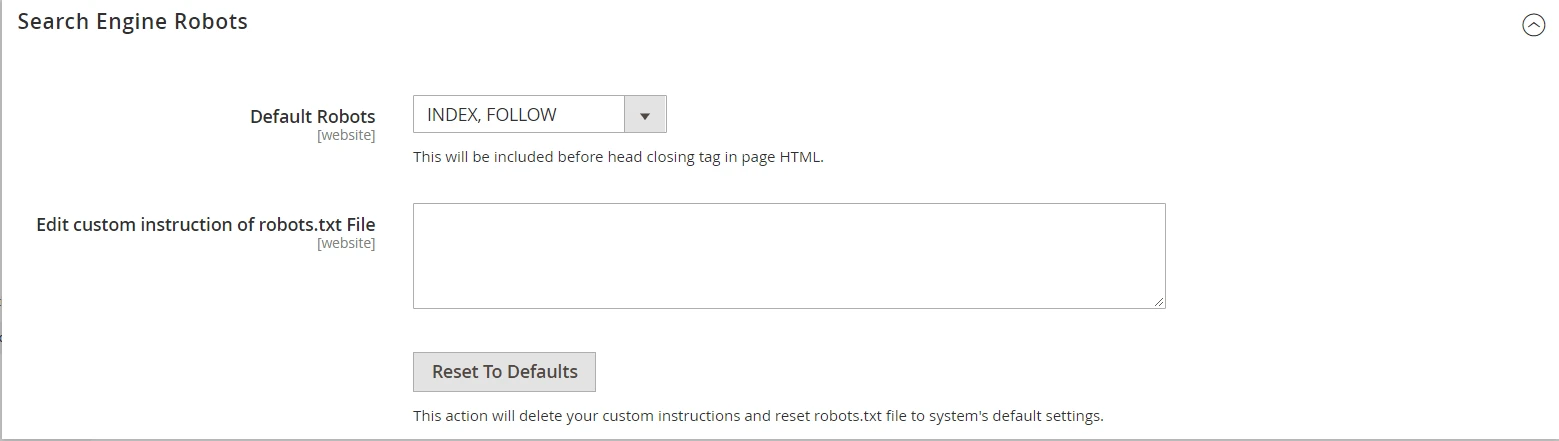

2. In the panel on the far left, click Content > Design > Configuration, select a store, and open the Search Engine Robots section.

3. There you will find two settings: Default Robots and Edit custom instruction.

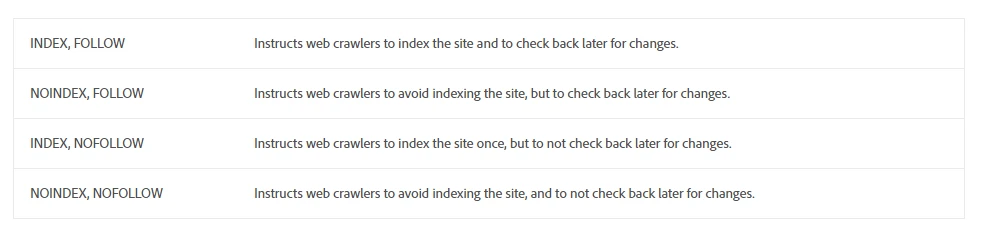

4. The Default Robots setting controls whether search engines may index your pages (so they appear in search results) and whether they should follow the links on them. The picture shows the four choices in Default Robots: INDEX, FOLLOW; NOINDEX, FOLLOW; INDEX, NOFOLLOW; and NOINDEX, NOFOLLOW.

Choose one of them. For a live store with product pages, the first option (INDEX, FOLLOW) is appropriate; for development pages, the last one (NOINDEX, NOFOLLOW) is suitable.

5. In the "Edit custom instruction" section, you can add rules that allow or disallow specific parts of the website:

Allow Full Access

The first option lets all bots crawl every part of the website:

    User-agent: *
    Disallow:

Disallow Access To ALL Folders

The second option blocks all compliant crawlers from the entire website. Visitors can still open the site, but search engines stop crawling its pages, which is useful for development or staging sites:

    User-agent: *
    Disallow: /

Default Instructions

The default instructions let you decide which parts are allowed and which are not. Each of the three options must start with a User-agent line (User-agent: * applies the rules to all crawlers):

    User-agent: *
    Disallow: /lib/
    Disallow: /*.php$
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /var/
    Disallow: /catalog/
    Disallow: /customer/
    Disallow: /sendfriend/
    Disallow: /review/
    Disallow: /*SID=

6. Magento generates some dynamic URLs, such as those carrying session IDs (SID=), that are of no use to searchers, so you can disallow them.

7. Besides the admin panel, you can create a robots.txt file for a Magento website by writing it by hand as a plain-text file or by using a third-party extension.

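8. Robots meta tags are another way to give crawlers instructions on Magento websites: instead of a separate file, each page carries a robots meta tag in its HTML head, which is what the Default Robots setting controls.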
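The `*` and `$` in the default instructions are wildcards: `*` matches any sequence of characters and a trailing `$` anchors the pattern to the end of the URL. The sketch below is a simplified Python matcher in the style of RFC 9309 (the Robots Exclusion Protocol): among matching rules the longest pattern wins, and Allow wins a tie. It is an illustration, not any search engine's actual code, and it assumes a single `User-agent: *` group; `parse_rules` and `is_allowed` are hypothetical names.

```python
import re

# The default instructions listed in step 5, with their User-agent line
MAGENTO_DEFAULT = """\
User-agent: *
Disallow: /lib/
Disallow: /*.php$
Disallow: /pkginfo/
Disallow: /report/
Disallow: /var/
Disallow: /catalog/
Disallow: /customer/
Disallow: /sendfriend/
Disallow: /review/
Disallow: /*SID=
"""


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def parse_rules(robots_txt):
    """Return (is_allow, pattern) pairs; assumes one 'User-agent: *' group."""
    rules = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        # An empty Disallow value means "allow everything", so it adds no rule
        if field.lower() in ("allow", "disallow") and value:
            rules.append((field.lower() == "allow", value))
    return rules


def is_allowed(path, rules):
    """Longest matching pattern wins; Allow wins a tie; no match means allowed."""
    best_length, allowed = -1, True
    for is_allow, pattern in rules:
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed


rules = parse_rules(MAGENTO_DEFAULT)
for path in ("/women/tops.html", "/index.php", "/index.php?id=1", "/customer/account/"):
    print(path, is_allowed(path, rules))
```

With these rules, `/index.php` is blocked by `/*.php$` while `/index.php?id=1` is not, because `$` requires the URL to end right after `.php`. An empty `Disallow:` adds no rule (full access), and `Disallow: /` matches every path (no access).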
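Unlike robots.txt, a robots meta tag sits in each page's HTML head, for example `<meta name="robots" content="NOINDEX,NOFOLLOW">`. To confirm which directives a page actually carries, a small Python sketch using the standard-library `html.parser` can extract them; the `robots_directives` helper is hypothetical, and it assumes the tag is written with `name="robots"`.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not their values
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        for token in attributes.get("content", "").split(","):
            if token.strip():
                self.directives.add(token.strip().upper())


def robots_directives(html: str) -> set:
    """Return the set of robots meta directives found in an HTML page."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return finder.directives


page = '<html><head><meta name="robots" content="NOINDEX,NOFOLLOW"/></head></html>'
print(sorted(robots_directives(page)))
```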

Magento 2 Sitemap Configuration Techniques

Keep your Magento 2 Sitemap up to date as often as you can, so that new and changed pages reach search engines quickly. Fresh pages in search results also show visitors that the store is active rather than abandoned. We will take you through the configuration steps one by one:

1. Go to Stores > Settings > Configuration and, in the left panel, open Catalog > XML Sitemap;

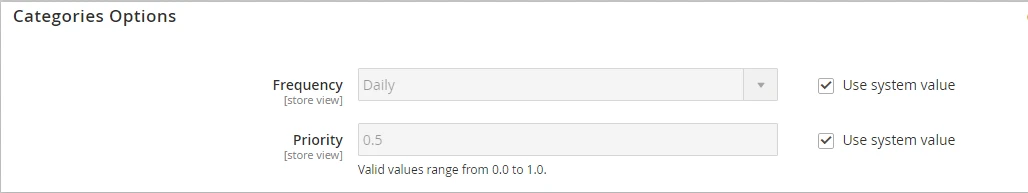

2. Open the Categories Options section and check each of its settings;

3. Set the Frequency, which tells crawlers how often these pages are likely to change. "Daily" and "Weekly" are the most commonly used values;

4. Next comes Priority, which ranges from 0.0 (lowest) to 1.0 (highest) and signals how important these pages are relative to other pages on your site. Use it to prioritize your category pages;

5. In the Products Options section, the Sitemap lets you include None, Base Only, or All product images. Keep the dimensions of the added images under control;

6. Adjust the CMS Pages Options as needed;

7. Save the configuration. Magento 2 offers several ways to create the Sitemap file itself, for example under Marketing > SEO & Search > Site Map.
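The Frequency and Priority settings map onto the `<changefreq>` and `<priority>` tags defined by the sitemaps.org protocol. The Python sketch below builds one `<url>` entry and rejects values the protocol does not allow; the `url_entry` helper and its defaults are assumptions for illustration, not Magento's implementation.

```python
import xml.etree.ElementTree as ET

# <changefreq> values permitted by the sitemaps.org protocol
CHANGEFREQ_VALUES = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}


def url_entry(loc, changefreq="weekly", priority=0.5):
    """Build one sitemap <url> element, rejecting out-of-range settings."""
    if changefreq not in CHANGEFREQ_VALUES:
        raise ValueError(f"unsupported changefreq: {changefreq!r}")
    if not 0.0 <= priority <= 1.0:
        raise ValueError(f"priority must be between 0.0 and 1.0, got {priority}")
    url = ET.Element("url")
    ET.SubElement(url, "loc").text = loc
    ET.SubElement(url, "changefreq").text = changefreq
    ET.SubElement(url, "priority").text = f"{priority:.1f}"  # rounded to one decimal
    return url


entry = url_entry("https://shop.example.com/women.html", changefreq="daily", priority=0.5)
print(ET.tostring(entry, encoding="unicode"))
```

In a complete file, each such `<url>` element would sit inside the `<urlset>` root; validating the values up front keeps a typo in the settings from producing an entry crawlers ignore.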

Advanced SEO Suite Extensions

Buying separate plugins or extensions to boost your website ranking can cost you a fortune. That is where the Advanced SEO Suite extension comes to the rescue.

You don't have to spend hundreds of dollars on SEO. Instead, you get a tool that applies SEO settings automatically.

You can enable it in just a few clicks: purchase the extension, follow its online documentation to get started, and let it add the technical SEO elements automatically to attract traffic and rank better in search engines.

Below are some of the characteristics to consider when getting started:

- Automatic SEO analysis using graphs and tables;

- Appropriate and attractive snippets;

- SEO meta tag rewriting with well-designed SEO templates;

- Sitemap generator in XML or HTML format included;

- Configurable canonical URLs for the online store.

Final Thoughts

In short, Robots.txt and Sitemap files are crucial parts of the Magento eCommerce platform.

Use them to control what Googlebot and other crawlers see, and keep crawlers away from sensitive sections of your site. Remember that robots.txt is a request, not a lock, so truly private data still needs real access control.

If you give crawlers access to every page, heavy crawling can increase server load and even cause errors. You cannot control which bots visit your site, but you can control what they are allowed to crawl.

Advanced SEO extensions are another great tool worth exploring. They help you avoid duplicate webpages and protect your content while you climb higher in the rankings. Contact Mirasvit to get the Advanced SEO Suite extension for your online store, and for help with the robots.txt and Sitemap files of your Magento 2 eCommerce platform!